An Energy-efficient Deep Belief Network Processor Based on Heterogeneous Multi-core Architecture with Transposable Memory and On-chip Learning

Abstract

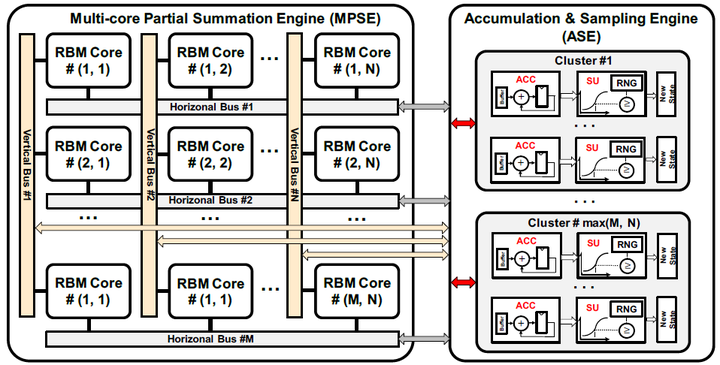

With the growing interest in edge computing for the Internet of Things (IoT), Deep Neural Network (DNN) hardware processors and accelerators must deliver low energy consumption and low latency while preserving data privacy. This paper proposes an energy-efficient processor design based on the Deep Belief Network (DBN), one of the DNN models best suited to on-chip learning. The design is optimized jointly across the algorithm, architecture, and circuit levels. The data reuse and data sparsity inherent in the DBN learning algorithm motivate a heterogeneous multi-core architecture with local learning. In addition, two novel circuits are proposed to maximize energy efficiency: a transposable weight memory, which reduces weight memory accesses, and a sparse address generator, which exploits neuron state sparsity. The DBN processor is implemented and thoroughly evaluated on a Xilinx Zynq FPGA. Implementation results show that the proposed DBN processor achieves an energy efficiency of 45.0 pJ per neuron-weight update, a 74% improvement over the conventional design.
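As a rough software analogy for the two circuit ideas named above, the sketch below reads a single weight array row-wise for the visible-to-hidden pass and column-wise for the hidden-to-visible pass, and skips neurons whose state is zero. It is an illustration under stated assumptions, not the processor's implementation: the function names (`active_addresses`, `forward_sums`, `backward_sums`), the toy weights, and the binary states are all hypothetical.

```python
# Illustrative sketch only; names and values are hypothetical, not from the paper.

def active_addresses(states):
    """Return indices of non-zero neuron states (software stand-in for sparse addressing)."""
    return [i for i, s in enumerate(states) if s != 0]


def forward_sums(weights, visible):
    """Hidden pre-activations: accumulate only the rows of active visible neurons."""
    n_hidden = len(weights[0])
    sums = [0.0] * n_hidden
    for i in active_addresses(visible):
        for j in range(n_hidden):
            sums[j] += weights[i][j] * visible[i]
    return sums


def backward_sums(weights, hidden):
    """Visible pre-activations: read the same array column-wise (transposed access)."""
    n_visible = len(weights)
    sums = [0.0] * n_visible
    for j in active_addresses(hidden):
        for i in range(n_visible):
            sums[i] += weights[i][j] * hidden[j]
    return sums


# Toy example: 3 visible neurons, 2 hidden neurons, binary states.
weights = [[0.5, -1.0], [2.0, 0.25], [-0.75, 1.5]]
visible = [1, 0, 1]
hidden = [0, 1]

print(forward_sums(weights, visible))
print(backward_sums(weights, hidden))
```

In this sketch the number of multiply-accumulate steps scales with the number of active neurons rather than with the layer size, and no second, transposed copy of the weights is stored.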